
Microsoft announced two new chips for its data center infrastructure at the Ignite developer conference today: the Azure Integrated HSM and the Azure Boost DPU. Set to launch soon, the custom chips are meant to address security and efficiency challenges in existing data centers and better equip their servers for large-scale AI workloads. They follow the Maia AI accelerator and Cobalt CPU that Microsoft unveiled last year, part of the company's push to optimize every layer of its technology stack, from silicon to software, for advanced AI applications.

Microsoft, led by CEO Satya Nadella, also outlined new approaches to managing power consumption and heat in data centers, amid growing concern about the environmental impact of AI-driven facilities. A recent Goldman Sachs report predicts that advanced AI workloads could drive a 160% increase in data center power demand by 2030, with these facilities potentially consuming 3% to 4% of global power by the end of the decade.

The new chips

While Microsoft continues to use top-tier hardware from industry leaders such as Nvidia and AMD, it has also been developing its own custom chips. At last year's Ignite, Microsoft unveiled the Azure Maia AI accelerator, optimized for AI and generative AI tasks, and the Azure Cobalt CPU, an Arm-based processor for general-purpose compute workloads on the Microsoft Cloud.

Building on that foundation, Microsoft has expanded its custom silicon portfolio with a focus on security and efficiency. The new Azure Integrated HSM is a dedicated hardware security module designed to meet the FIPS 140-3 Level 3 security standard. According to Omar Khan, Vice President for Azure Infrastructure Marketing, the module strengthens key management by keeping encryption and signing keys inside the chip, without compromising performance or increasing latency.

The Azure Integrated HSM leverages specialized hardware cryptographic accelerators to enable secure, high-performance cryptographic operations directly within the chip’s isolated environment. Unlike traditional HSM architectures that require network communication or key extraction, this chip performs encryption, decryption, signing, and verification operations entirely within its dedicated hardware boundary.

The Azure Boost DPU (data processing unit), meanwhile, is designed to make data centers more power-efficient at handling the highly multiplexed data streams generated by millions of network connections. Microsoft's first chip of this kind, it consolidates several components of a traditional server into a single piece of silicon, including high-speed Ethernet and PCIe interfaces, network and storage engines, data accelerators, and security features.

The DPU pairs its hardware with a custom, lightweight data-flow operating system in a hardware-software co-design that, according to Microsoft, delivers higher performance, lower power consumption, and greater efficiency than conventional implementations. Microsoft expects the Azure Boost DPU to run cloud storage workloads at one third of the power and four times the performance of existing CPU-based servers.
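Taken together, the two DPU figures imply a single performance-per-watt ratio. The short Python sketch that follows works it out under one assumption Microsoft did not state: that both multipliers describe the same storage workload measured against the same CPU-based baseline. The function name `perf_per_watt_gain` is hypothetical, chosen only for illustration.

```python
from fractions import Fraction


def perf_per_watt_gain(perf_multiplier: Fraction, power_multiplier: Fraction) -> Fraction:
    """Return new performance-per-watt divided by baseline performance-per-watt.

    perf_multiplier: new performance relative to the baseline (4 means 4x).
    power_multiplier: new power draw relative to the baseline (1/3 means a third).
    """
    return perf_multiplier / power_multiplier


# Assumption: "four times the performance" and "one third of the power"
# apply to the same workload and the same baseline server.
gain = perf_per_watt_gain(Fraction(4), Fraction(1, 3))
print(gain)  # prints 12
```

Exact fractions avoid floating-point rounding in the division. Under that reading, the DPU would deliver roughly 12 times the performance per watt of a CPU-based server; if the two figures come from different benchmarks, the combined ratio would not hold.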

New approaches to cooling, power optimization

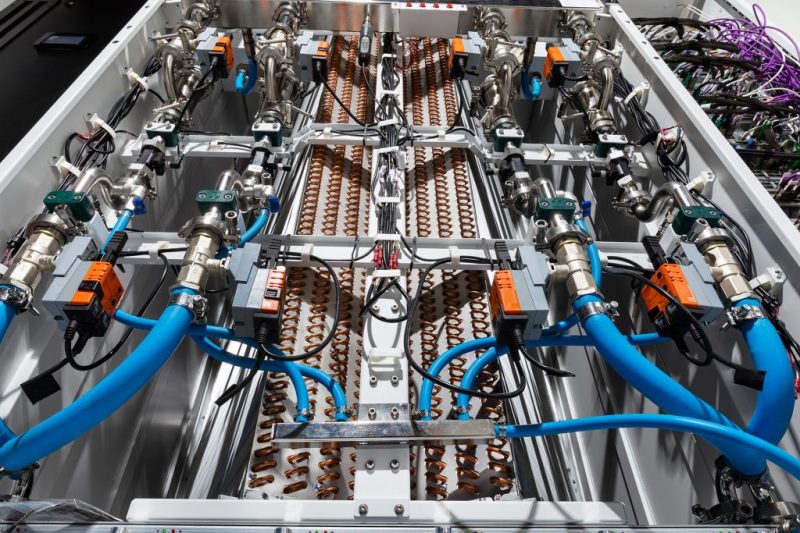

Alongside the new chips, Microsoft introduced advances in data center cooling and power optimization. The company unveiled an enhanced version of its heat exchanger unit, a liquid cooling ‘sidekick’ rack. Specific gains from the technology were not disclosed, but the unit can be retrofitted into Azure data centers to manage the heat from large-scale AI systems built on AI accelerators and power-hungry GPUs such as Nvidia's.

On the energy management front, Microsoft collaborated with Meta on a new disaggregated power rack aimed at enhancing flexibility and scalability. Each disaggregated power rack will feature 400-volt DC power, enabling up to 35% more AI accelerators in each server rack and allowing dynamic power adjustments to accommodate varying demands of AI workloads.

Microsoft plans to open-source the specifications for the cooling and power racks through the Open Compute Project. The company also intends to install Azure Integrated HSMs in every new data center server starting next year. It has not yet given a timeline for the DPU rollout.

Microsoft Ignite will continue until November 22, 2024.