On November 6, 2025, a new technique was introduced that could change how we understand and interpret brain activity. Known as ‘mind captioning,’ it generates descriptive sentences of what a person is seeing or picturing by analyzing their brain activity. Beyond offering a window into how the brain interprets the world, the approach could one day help people with language difficulties, such as those caused by strokes, express themselves more effectively.

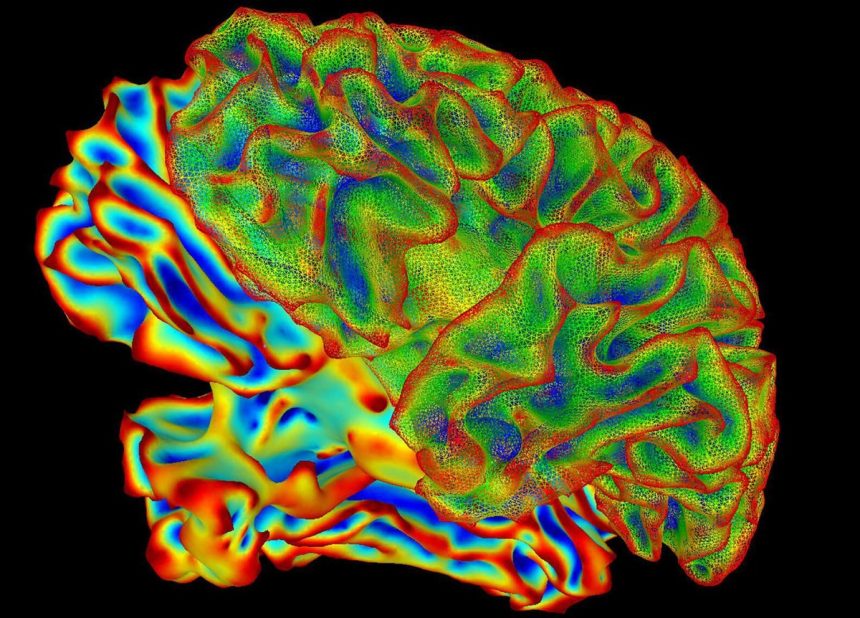

The method is detailed in a paper published in Science Advances, which sheds light on how the brain represents visual information before it is put into words. Researchers have long been able to predict what a person is seeing or hearing from recordings of brain activity, but decoding complex content, such as videos or abstract shapes, has proved difficult.

The technique, developed by computational neuroscientist Tomoyasu Horikawa, uses artificial intelligence models to analyze brain scans of participants while they watch videos. By learning to match brain activity patterns to unique numerical ‘meaning signatures’ derived from the videos’ text captions, the system can predict the signature of new brain activity and generate descriptive sentences that closely resemble the content being viewed.
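The decoding idea described above can be illustrated with a deliberately simplified sketch. It assumes that each caption’s ‘meaning signature’ is a fixed-length vector, that a linear (ridge regression) decoder maps voxel patterns to those vectors, and that the output sentence is picked from a small list of candidate captions by cosine similarity. All data are random stand-ins, the function names and captions are hypothetical, and the retrieval step is a simplification substituted here, not the text-generation procedure used in the paper.

```python
import numpy as np


def fit_ridge_decoder(brain: np.ndarray, features: np.ndarray, alpha: float = 1.0) -> np.ndarray:
    """Closed-form ridge regression, W = (X^T X + alpha*I)^-1 X^T Y, mapping
    voxel patterns (n_samples x n_voxels) to signatures (n_samples x n_features)."""
    n_voxels = brain.shape[1]
    gram = brain.T @ brain + alpha * np.eye(n_voxels)
    return np.linalg.solve(gram, brain.T @ features)  # shape: n_voxels x n_features


def best_caption(decoded: np.ndarray, caption_features: np.ndarray, captions: list[str]) -> str:
    """Return the candidate whose signature has the highest cosine similarity to the decoded vector."""
    dec = decoded / np.linalg.norm(decoded)
    cands = caption_features / np.linalg.norm(caption_features, axis=1, keepdims=True)
    return captions[int(np.argmax(cands @ dec))]


rng = np.random.default_rng(0)
n_features, n_voxels, n_train = 16, 200, 500

# Hypothetical "brain": each voxel is a noisy linear mix of the signature dimensions.
mixing = rng.normal(size=(n_features, n_voxels))

# Training set: random signatures standing in for captioned training videos.
train_features = rng.normal(size=(n_train, n_features))
train_brain = train_features @ mixing + rng.normal(size=(n_train, n_voxels))
weights = fit_ridge_decoder(train_brain, train_features, alpha=10.0)

# Candidate captions (hypothetical) whose signatures were never seen in training.
captions = ["a person jumps from a waterfall", "a dog runs on a beach", "a car drives through a city"]
caption_features = rng.normal(size=(len(captions), n_features))

# Simulated scan recorded while "watching" the first clip, then decoded.
test_scan = caption_features[0] @ mixing + rng.normal(size=n_voxels)
decoded = test_scan @ weights
result = best_caption(decoded, caption_features, captions)
print(result)
```

Because the decoder learns a general voxel-to-signature mapping rather than memorizing training clips, it can score captions it never saw during training. A system closer to the one described would replace the fixed candidate list with a text generator that searches for the sentence whose signature best matches the decoded one.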

For instance, when a participant watched a video of a person jumping from a waterfall, the model generated the description “a person jumps over a deep water fall on a mountain ridge” from that participant’s brain activity. The technique also produced descriptions of video clips that participants merely recalled, suggesting that the brain represents viewed and remembered content in similar ways.

Because the method relies on non-invasive functional magnetic resonance imaging (fMRI), it holds promise for brain-computer interfaces that translate non-verbal mental representations directly into text. Such interfaces could give people with communication difficulties a new way to express their thoughts.

The technology’s potential applications are broad, but it has also raised concerns about mental privacy. As brain decoding comes closer to revealing intimate thoughts and emotions, researchers must follow ethical practices and obtain consent from participants. Researchers such as Alex Huth stress the importance of respecting privacy and using these techniques responsibly.

In conclusion, ‘mind captioning’ marks a significant step forward in decoding brain activity, with potential applications in communication and cognitive science. As the field evolves, it will be crucial to prioritize ethical considerations and safeguard mental privacy while putting these technologies to work for the benefit of society.