The use of generative artificial intelligence for medical diagnoses is becoming increasingly popular, with people turning to AI models like ChatGPT for answers about their health concerns. But how accurate are these AI-generated diagnoses? A recent study published in the journal iScience aimed to test the capabilities of ChatGPT in the biomedical field and shed light on its accuracy.

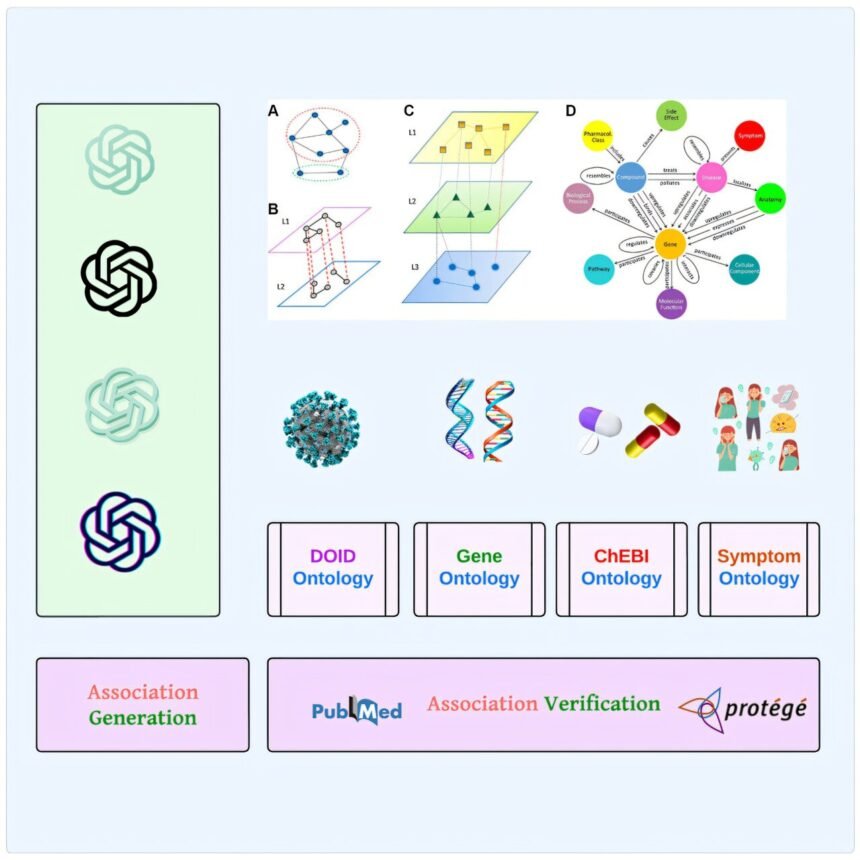

Led by Ahmed Abdeen Hamed, a research fellow at Binghamton University, the study tested ChatGPT’s ability to identify disease terms, drug names, genetic information, and symptoms. The AI identified disease terms (88–97%), drug names (90–91%), and genetic information (88–98%) with high accuracy, exceeding the researchers’ expectations.

However, when it came to identifying symptoms, ChatGPT’s accuracy was lower (49–61%). One possible reason for this discrepancy is the gap between the language used by healthcare professionals and that used by the general public. While medical professionals use formal terminology, users interacting with ChatGPT may describe symptoms in more informal language, which makes accurate symptom identification harder.

One notable issue that the researchers encountered was ChatGPT’s tendency to “hallucinate” when asked for specific genetic information. For example, when asked for GenBank accession numbers, ChatGPT would generate fake numbers instead of providing accurate data. This highlights a major limitation of the AI model that needs to be addressed for improved accuracy.
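To make the accession-number problem concrete, here is a minimal sketch of a format check that could flag some fabricated identifiers. It is not part of the study. The function name `looks_like_genbank_accession` is hypothetical, and the patterns cover only the common GenBank layouts (one letter plus five digits, two letters plus six or eight digits, three letters plus five or seven digits, each with an optional version suffix), so they are an assumption rather than an exhaustive specification.

```python
import re

# Assumed, non-exhaustive layouts for GenBank accessions:
#   nucleotide: 1 letter + 5 digits, 2 letters + 6 digits, 2 letters + 8 digits
#   protein:    3 letters + 5 digits, 3 letters + 7 digits
# An optional ".N" version suffix is allowed (for example "U49845.1").
_ACCESSION = re.compile(
    r"(?:[A-Z]\d{5}|[A-Z]{2}\d{6}|[A-Z]{2}\d{8}|[A-Z]{3}\d{5}|[A-Z]{3}\d{7})"
    r"(?:\.\d+)?"
)


def looks_like_genbank_accession(text: str) -> bool:
    """Return True if text is shaped like a GenBank accession number.

    This checks the format only. It cannot tell whether a record with
    that accession actually exists in the database.
    """
    return _ACCESSION.fullmatch(text.strip().upper()) is not None


if __name__ == "__main__":
    for candidate in ["U49845", "AF123456.1", "GENBANK-001", "12345"]:
        print(candidate, looks_like_genbank_accession(candidate))
```

A check like this only catches identifiers that are malformed. A fabricated number that happens to follow a valid layout would still pass, so confirming that an accession is real requires looking it up in GenBank itself.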

Despite these challenges, Hamed sees potential in integrating biomedical ontologies into large language models like ChatGPT to enhance accuracy and reduce errors. By addressing these knowledge gaps and hallucination issues, AI models can be further improved for medical diagnosis and decision-making.
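One way to picture the ontology idea is a lookup step that checks terms returned by a model against a controlled vocabulary and maps informal wording to formal terms. The sketch below is purely illustrative and is not the approach described by the researchers. The three-entry `VOCABULARY` is a toy stand-in for a real biomedical ontology, and `build_index` and `check_terms` are hypothetical helper names.

```python
# Toy vocabulary (an assumption for illustration): each formal term maps to
# informal synonyms a member of the public might use instead.
VOCABULARY = {
    "myocardial infarction": {"heart attack"},
    "hypertension": {"high blood pressure"},
    "rhinorrhea": {"runny nose"},
}


def build_index(vocabulary):
    """Map every formal term and synonym (lowercased) to its formal term."""
    index = {}
    for canonical, synonyms in vocabulary.items():
        index[canonical.lower()] = canonical
        for synonym in synonyms:
            index[synonym.lower()] = canonical
    return index


def check_terms(terms, index):
    """Split terms into those found in the vocabulary and those that are not.

    Returns (verified, unverified): verified maps each recognized input term
    to its formal term, and unverified lists terms with no match, which may
    be errors, hallucinations, or simply gaps in the vocabulary.
    """
    verified, unverified = {}, []
    for term in terms:
        key = " ".join(term.lower().split())  # normalize case and spacing
        if key in index:
            verified[term] = index[key]
        else:
            unverified.append(term)
    return verified, unverified


if __name__ == "__main__":
    index = build_index(VOCABULARY)
    print(check_terms(["Heart attack", "hypertension", "glorbitis"], index))
```

Exact-match lookup is the simplest possible design. A real system would need a far larger vocabulary and more tolerant matching, since an unmatched term is not necessarily a wrong one.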

Hamed’s research underscores the importance of identifying and rectifying flaws in AI models so that the medical information they provide is reliable. With those flaws corrected, data scientists can make models like ChatGPT more trustworthy tools for healthcare professionals and the general public.