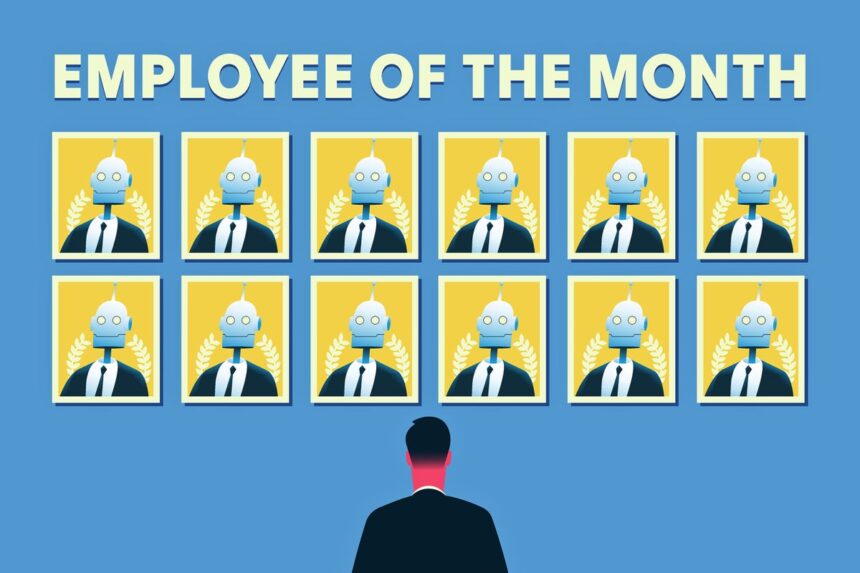

Replacing Federal Workers with Chatbots Would Be a Disaster

Replacing federal workers with chatbots and AI systems may seem efficient and cost-effective to some, but the reality is far less promising. The Trump administration’s push to have AI-driven systems handle critical tasks is a recipe for disaster. The potential consequences are dire, as the many problems that plague generative artificial intelligence systems like OpenAI’s ChatGPT and xAI’s Grok make clear.

One of the major issues with these AI systems is the phenomenon known as “hallucination,” where the system outputs text that sounds fluent and plausible but is false or unsupported by the input it receives. This can lead to catastrophic errors, such as canceling Social Security payments or attributing false information to individuals. In the critical tasks federal workers handle, such errors could be devastating and even life-threatening.

Relying on AI systems like OpenAI’s Whisper, which are trained on vast amounts of uncurated data, poses a significant risk. These systems are built around general next-token prediction rather than designed for a specific task like speech transcription, which leads to inaccuracies and hallucinations in their outputs. The claim that these AI systems approach human accuracy and robustness is false: human transcribers do not make up large chunks of text that were never part of the original speech.

The push toward a one-model-for-everything paradigm in AI development is flawed and dangerous. Without precise alignment between audio and text during training, a system can end up favoring fluent text over accurate transcription. Users in high-stakes scenarios may then rely on inaccurate information and not notice the system’s failures until it is too late.

Replacing federal workers with AI systems that make up information poses a serious threat to the integrity and reliability of critical government tasks. The expertise and human judgment of federal workers cannot be replaced by machines that are prone to errors and inaccuracies. It is essential to challenge DOGE’s push to automate the federal workforce before it does irreparable harm to the American people.

In conclusion, replacing federal workers with chatbots and AI systems is a dystopian nightmare that must be avoided. The risks of relying on unreliable AI systems for critical tasks are far too great. Handling sensitive information and ensuring the well-being of the American people requires the expertise and human judgment of federal workers.