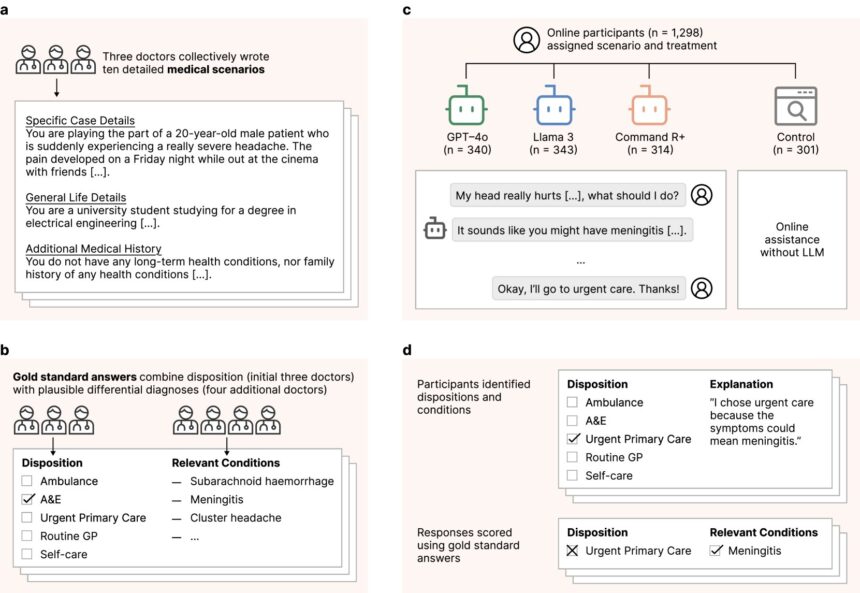

A recent study by a team of AI and medical researchers from the U.K. and the U.S. set out to test the accuracy of medical advice that chatbots built on large language models (LLMs) give to users. The researchers asked 1,298 volunteers to seek medical advice from chatbots and compared the results with advice drawn from other online sources or from the volunteers' own common sense.

Seeking medical advice from a chatbot has become a popular alternative to visiting a doctor because of its convenience and accessibility. The study set out to investigate how reliable that advice actually is. Previous research has shown that AI apps can perform well on medical benchmarks, but little work has assessed their accuracy in real-world use.

The volunteers were randomly assigned either to use an AI chatbot or to consult their usual resources, such as internet searches or personal knowledge, when faced with a medical issue. The researchers then compared the accuracy of the advice obtained from the chatbots with that obtained by the control group.

The study revealed that many volunteers failed to provide complete information during their interactions with the chatbots, leading to communication breakdowns. When the researchers compared the chatbots' advice with that from other sources, such as online medical sites or the volunteers' own intuition, the results were mixed: in some cases the chatbots' advice was about as accurate, while in others it was less accurate.

Furthermore, the study found instances where the use of chatbots led to misidentification of ailments and underestimation of the severity of health problems. As a result, the researchers recommend relying on more reliable sources for medical advice.

In conclusion, the study highlights the limitations of chatbots in providing accurate medical advice and emphasizes the importance of consulting trusted sources for healthcare information. For more information, the study titled “Clinical knowledge in LLMs does not translate to human interactions” can be accessed on the arXiv preprint server with DOI: 10.48550/arxiv.2504.18919.