The Ethical Implications of AI-Powered Crime Prediction Tools

Simone Rotella

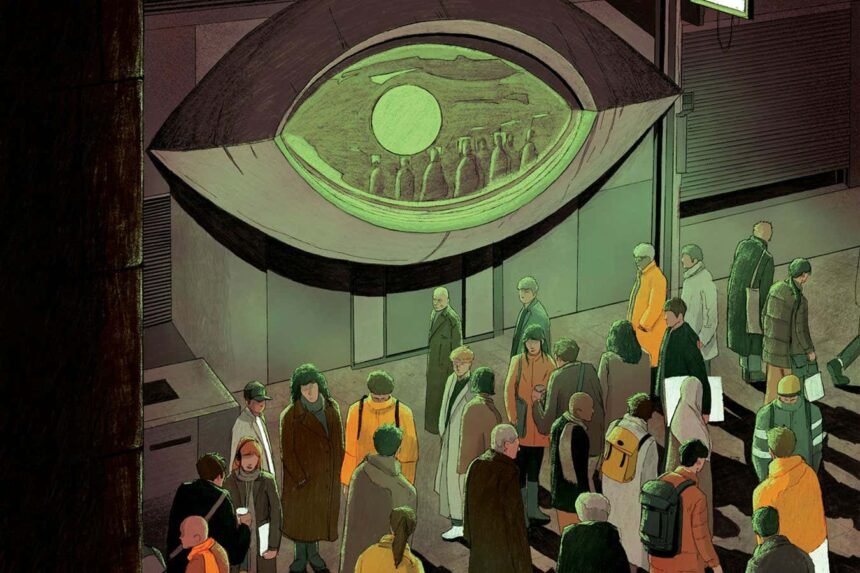

The UK government’s latest initiative to deploy an AI-powered crime prediction tool has sparked controversy and raised important ethical questions. The tool is designed to flag individuals considered “high risk” of future violent behavior, based on personal data such as mental health and addiction history.

Similar efforts are underway in other countries. Argentina has recently established an Artificial Intelligence Unit for Security, which aims to use machine learning for crime prediction and real-time surveillance. In Canada, various police forces, including those in Toronto and Vancouver, use predictive policing techniques and tools such as Clearview AI’s facial recognition software. Some cities in the United States have also started pairing AI facial recognition with street surveillance to track potential suspects.

While the prospect of stopping violence before it happens, reminiscent of the movie Minority Report, is intriguing, it also raises significant concerns…