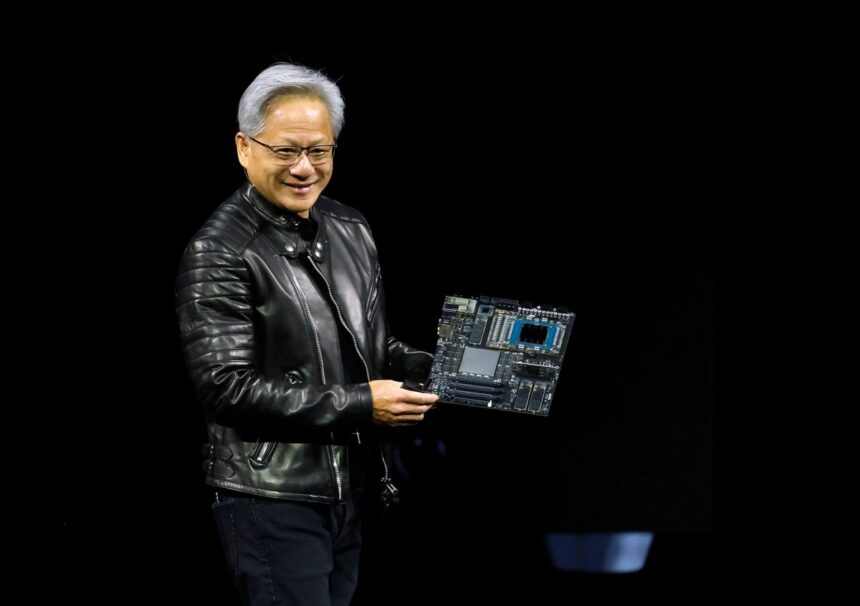

Nvidia Unveils New AI Models and Infrastructure for Robotics Developers

Nvidia has announced a set of new world AI models, libraries, and infrastructure for robotics developers. The most notable is Cosmos Reason, a 7-billion-parameter "reasoning" vision language model designed for physical AI applications and robots.

Joining the existing lineup of Cosmos world models are Cosmos Transfer-2, which enhances synthetic data generation from 3D simulation scenes or spatial control inputs, and a distilled version of Cosmos Transfer optimized for speed.

During the unveiling at the SIGGRAPH conference, Nvidia emphasized that these models are intended for creating synthetic text, image, and video datasets for training robots and AI agents.

Cosmos Reason is designed to let robots and AI agents "reason" by drawing on memory and an understanding of physics. This allows it to serve as a planning model that works out an embodied agent's next steps, which makes it useful for data curation, robot planning, and video analytics.

In addition to the new models, Nvidia introduced neural reconstruction libraries, including one for a rendering technique that lets developers simulate the real world in 3D using sensor data. This rendering capability is being integrated into CARLA, a popular open-source simulator. Nvidia also released an update to its Omniverse software development kit.

For robotics workflows, Nvidia also announced new infrastructure: the Nvidia RTX Pro Blackwell Server, which offers a single architecture for robotic development workloads, and Nvidia DGX Cloud, a cloud-based management platform.

These announcements reflect Nvidia’s strategic move into the robotics space as it explores new use cases for its AI GPUs beyond traditional AI data centers.
